# Import pandas for data manipulation and matplotlib for visualization

import pandas as pd

import matplotlib.pyplot as plt

# If not installed, you can run in the terminal:

# python3 -m pip install matplotlib plotly

# python3 -m pip install pandas

# or, on Windows:

# py -m pip install matplotlib plotly

# py -m pip install pandas

Workflow & Reproducible Research

Orientation: Code Along

Agenda

Python Code-Along

Data-Intensive Research Workflow

- Prepare

- Wrangle

- Explore

- Model

- Communicate

Prepare

The Study

The setting of this study was a public provider of individual online courses in a Midwestern state. Data were collected over two semesters from five online science courses, with the aim of understanding students’ motivation.

Research Question

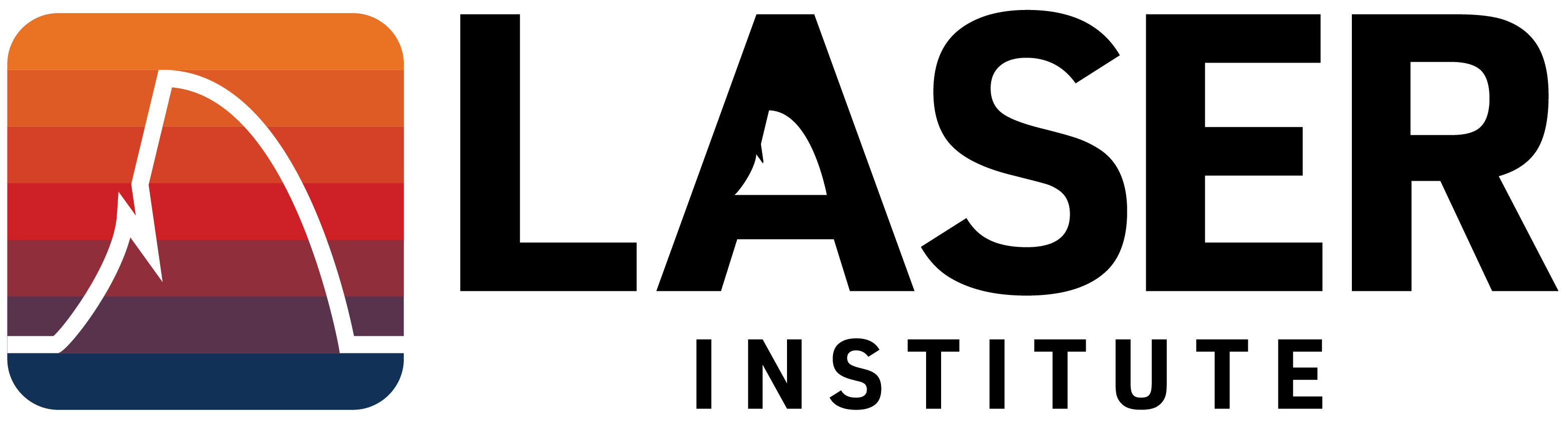

Is there a relationship between the time students spend in a learning management system and their final course grade?

The Tools of Reproducible Research

In Python, packages are equivalent to R’s libraries, containing functions, modules, and documentation. They can be installed using pip and imported into your scripts.

The pandas library in Python is used for data manipulation and analysis. Like the {readr} package in R, it provides functions such as pd.read_csv() for importing rectangular data from delimited text files such as comma-separated values (CSV), a preferred file format for reproducible research.

Inspecting the dataset in Python can be done by displaying the first few rows of the DataFrame.

student_id course_id total_points_possible total_points_earned \

0 43146 FrScA-S216-02 3280 2220

1 44638 OcnA-S116-01 3531 2672

2 47448 FrScA-S216-01 2870 1897

3 47979 OcnA-S216-01 4562 3090

4 48797 PhysA-S116-01 2207 1910

percentage_earned subject semester section \

0 0.676829 FrScA S216 2

1 0.756726 OcnA S116 1

2 0.660976 FrScA S216 1

3 0.677335 OcnA S216 1

4 0.865428 PhysA S116 1

Gradebook_Item Grade_Category ... q7 q8 q9 \

0 POINTS EARNED & TOTAL COURSE POINTS NaN ... 5.0 5.0 4.0

1 ATTEMPTED NaN ... 4.0 5.0 4.0

2 POINTS EARNED & TOTAL COURSE POINTS NaN ... 4.0 5.0 3.0

3 POINTS EARNED & TOTAL COURSE POINTS NaN ... 4.0 5.0 5.0

4 POINTS EARNED & TOTAL COURSE POINTS NaN ... 4.0 4.0 NaN

q10 TimeSpent TimeSpent_hours TimeSpent_std int pc uv

0 5.0 1555.1667 25.919445 -0.180515 5.0 4.5 4.333333

1 4.0 1382.7001 23.045002 -0.307803 4.2 3.5 4.000000

2 5.0 860.4335 14.340558 -0.693260 5.0 4.0 3.666667

3 5.0 1598.6166 26.643610 -0.148447 5.0 3.5 5.000000

4 3.0 1481.8000 24.696667 -0.234663 3.8 3.5 3.500000

[5 rows x 30 columns]

What variables do you think might help us answer our research question?
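One way to look for candidate variables is to list the column names and types instead of relying on the truncated preview. The sketch below is only an illustration: `toy` is a hypothetical stand-in for the course file, built from four of the 30 columns with values copied from the rows printed above.

```python
import io
import pandas as pd

# Toy stand-in for data/sci-online-classes.csv (assumption: only 4 of the
# 30 columns, with values copied from the first rows of the printed output).
csv_text = """student_id,course_id,total_points_possible,total_points_earned
43146,FrScA-S216-02,3280,2220
44638,OcnA-S116-01,3531,2672
47448,FrScA-S216-01,2870,1897
"""
toy = pd.read_csv(io.StringIO(csv_text))

print(toy.shape)             # (number of rows, number of columns)
print(toy.columns.tolist())  # every column name, without truncation
print(toy.dtypes)            # the type pandas inferred for each column
```

Running the same three calls on sci_data would list all 30 columns, including those hidden behind the `...` in the preview.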

Python syntax for reading and inspecting data can be intuitive and powerful. For example:

sci_data = pd.read_csv("data/sci-online-classes.csv")

- Functions are like verbs: pd.read_csv() is the function used to read a CSV file into a pandas DataFrame.

- Objects are the nouns: In this case, sci_data becomes the object that stores the DataFrame created by pd.read_csv("data/sci-online-classes.csv").

- Arguments are like adverbs: "data/sci-online-classes.csv" is the argument to pd.read_csv(), specifying the path to the CSV file. As with R’s read_csv(), pandas by default takes the column names from the first row of the file, so no extra argument is needed when the file has a header row.

- Operators are like “punctuation”: = is the assignment operator in Python, used to assign the DataFrame returned by pd.read_csv("data/sci-online-classes.csv") to the object sci_data.
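To see how arguments change what a function does, the sketch below reads the same two records twice: once relying on the default header inference, and once from text without a header row. The two small CSV strings are made up for illustration, with values borrowed from the preview; `header` and `names` are standard pd.read_csv() parameters.

```python
import io
import pandas as pd

# Two illustrative CSV snippets (assumption: not the real course file).
with_header = "student_id,TimeSpent\n43146,1555.1667\n44638,1382.7001\n"
no_header = "43146,1555.1667\n44638,1382.7001\n"

# Default behaviour: the first row supplies the column names.
a = pd.read_csv(io.StringIO(with_header))

# No header row in the file: say so, and pass the names explicitly.
b = pd.read_csv(io.StringIO(no_header), header=None,
                names=["student_id", "TimeSpent"])

print(list(a.columns) == list(b.columns))
print(a.head())
```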

Wrangle

Data wrangling is the process of cleaning, “tidying”, and transforming data. In Learning Analytics, it often involves merging (or joining) data from multiple sources.

- Data wrangling in Python is primarily done using pandas, allowing for cleaning, filtering, and transforming data.
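Merging can be sketched with two small tables that share a student_id key. The table names `gradebook` and `lms_log` are hypothetical; the values are taken from the preview rows shown earlier and split across two frames purely for illustration.

```python
import pandas as pd

# Hypothetical source tables (assumption: the real data may be organised differently).
gradebook = pd.DataFrame({
    "student_id": [43146, 44638, 47448],
    "total_points_earned": [2220, 2672, 1897],
})
lms_log = pd.DataFrame({
    "student_id": [43146, 44638, 47979],
    "TimeSpent": [1555.1667, 1382.7001, 1598.6166],
})

# Inner join: keep only students that appear in both tables.
merged = gradebook.merge(lms_log, on="student_id", how="inner")
print(merged)
```

Changing `how` to "left" or "outer" would keep unmatched students and fill the missing side with NaN.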

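Since we are interested in the relationship between time spent in an online course and final grades, let’s select the FinalGradeCEMS and TimeSpent columns from sci_data. In pandas this is done by indexing the DataFrame with a list of column names, which plays the role of select() in R.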
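A minimal sketch of that selection follows. The frame is a toy one: the TimeSpent values come from the preview above, while the FinalGradeCEMS values are invented placeholders because the preview does not show that column.

```python
import pandas as pd

# Toy frame. FinalGradeCEMS values are made up for illustration only.
sci_data = pd.DataFrame({
    "student_id": [43146, 44638, 47448],
    "TimeSpent": [1555.1667, 1382.7001, 860.4335],
    "FinalGradeCEMS": [90.0, 80.0, 70.0],
})

# A list of names inside [] returns a DataFrame with just those columns.
selected = sci_data[["FinalGradeCEMS", "TimeSpent"]]
print(selected.head())
```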

Explore

Exploratory data analysis in Python involves processes of describing your data numerically or graphically, which often includes:

- calculating summary statistics like frequency, means, and standard deviations

- visualizing your data through charts and graphs

EDA can be used to help answer research questions, generate new questions about your data, discover relationships between and among variables, and create new variables (i.e., feature engineering) for data modeling.
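The numerical side of EDA can be sketched with a few pandas calls. The five rows below are copied from the preview shown earlier. Dividing TimeSpent by 60 reproduces the TimeSpent_hours column in that preview, which suggests TimeSpent is recorded in minutes; treat that as an inference rather than documentation.

```python
import pandas as pd

# subject and TimeSpent values copied from the five preview rows.
df = pd.DataFrame({
    "subject": ["FrScA", "OcnA", "FrScA", "OcnA", "PhysA"],
    "TimeSpent": [1555.1667, 1382.7001, 860.4335, 1598.6166, 1481.8000],
})

print(df["subject"].value_counts())  # frequency of each subject
print(df["TimeSpent"].describe())    # count, mean, std, min, quartiles, max

# Feature engineering: derive a new variable from an existing one.
df["TimeSpent_hours"] = df["TimeSpent"] / 60
print(df.groupby("subject")["TimeSpent_hours"].mean())
```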

The workflow for making a graph typically involves:

- Choosing the type of plot or visualization you want to create.

- Using the plotting function directly with your data.

- Optionally customizing the plot with titles, labels, and other aesthetic features.

Scatter plots are useful for visualizing the relationship between two continuous variables, such as TimeSpent and FinalGradeCEMS. Both seaborn and matplotlib provide a scatter plot function.
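The three workflow steps can be sketched as follows. This version uses matplotlib, which is imported at the top of this deck, rather than seaborn; the seaborn call is noted in a comment. The FinalGradeCEMS values are invented placeholders, so the picture is illustrative only.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt
import pandas as pd

# Toy data: TimeSpent from the preview rows, FinalGradeCEMS made up.
df = pd.DataFrame({
    "TimeSpent": [1555.1667, 1382.7001, 860.4335, 1598.6166, 1481.8000],
    "FinalGradeCEMS": [78.0, 75.0, 68.0, 80.0, 74.0],
})

fig, ax = plt.subplots()
ax.scatter(df["TimeSpent"], df["FinalGradeCEMS"])  # steps 1 and 2: pick the plot, pass the data
ax.set_xlabel("TimeSpent")                          # step 3: optional customization
ax.set_ylabel("FinalGradeCEMS")
ax.set_title("Time spent vs. final grade")

# seaborn equivalent, if seaborn is installed:
# import seaborn as sns
# sns.scatterplot(data=df, x="TimeSpent", y="FinalGradeCEMS")
```

In a notebook the figure displays automatically; in a script, call fig.savefig() with a file name of your choice or plt.show() with an interactive backend.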

Model

Before adding a constant or fitting the model, ensure the data doesn’t contain NaNs (Not a Number) or infinite values. In a pandas DataFrame, you can check and clean both with a combination of methods provided by pandas and NumPy.

import numpy as np

# Replace infinite values with NaN so they are removed in the next step

sci_data_clean = sci_data.replace([np.inf, -np.inf], np.nan)

# Drop rows with NaNs in 'TimeSpent' or 'FinalGradeCEMS'

sci_data_clean = sci_data_clean.dropna(subset=['TimeSpent', 'FinalGradeCEMS'])

To fit the model, you can use libraries such as statsmodels or scikit-learn. We’ll dive much deeper into modeling in subsequent learning labs, but for now let’s see if there is a statistically significant relationship between students’ final grades, FinalGradeCEMS, and the TimeSpent in the LMS:

OLS Regression Results

==============================================================================

Dep. Variable: FinalGradeCEMS R-squared: 0.134

Model: OLS Adj. R-squared: 0.132

Method: Least Squares F-statistic: 87.99

Date: Fri, 19 Jul 2024 Prob (F-statistic): 1.53e-19

Time: 12:14:17 Log-Likelihood: -2548.5

No. Observations: 573 AIC: 5101.

Df Residuals: 571 BIC: 5110.

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 65.8085 1.491 44.131 0.000 62.880 68.737

TimeSpent 0.0061 0.001 9.380 0.000 0.005 0.007

==============================================================================

Omnibus: 136.292 Durbin-Watson: 1.537

Prob(Omnibus): 0.000 Jarque-Bera (JB): 252.021

Skew: -1.381 Prob(JB): 1.88e-55

Kurtosis: 4.711 Cond. No. 3.97e+03

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 3.97e+03. This might indicate that there are

strong multicollinearity or other numerical problems.

Communicate

Krumm et al. (2018) have outlined the following 3-step process for communicating findings with education stakeholders:

- Select. Selecting analyses that are most important and useful to an intended audience, as well as selecting a format for displaying that information (e.g., chart, table).

- Polish. Refining or polishing data products, by adding or editing titles, labels, and notations and by working with colors and shapes to highlight key points.

- Narrate. Writing a narrative pairing a data product with its related research question and describing how best to interpret and use the data product.
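As one possible illustration of the Polish step, the sketch below draws the fitted line from the coefficients reported in the OLS table (const 65.8085, TimeSpent 0.0061) and gives the chart a takeaway title and labeled axes. The 0 to 3000 range for TimeSpent is an arbitrary choice for display, not a property of the data.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt
import numpy as np

# Coefficients as reported in the OLS Regression Results table.
intercept, slope = 65.8085, 0.0061

time_spent = np.linspace(0, 3000, 50)        # illustrative range (assumption)
predicted = intercept + slope * time_spent   # predicted FinalGradeCEMS

fig, ax = plt.subplots()
ax.plot(time_spent, predicted, color="tab:blue", label="OLS fit")
ax.set_title("More time in the LMS is associated with higher final grades")
ax.set_xlabel("TimeSpent")
ax.set_ylabel("Predicted FinalGradeCEMS")
ax.legend()
```

A title that states the finding, rather than naming the variables, pairs the chart with the research question, which is the point of the Narrate step.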

What’s Next?

Our First LASER Badge!

Next you will complete an interactive “case study”, which is a key component of each learning lab.

Navigate to the Files tab and open the following file:

laser-lab-case-study.RMD

Essential Readings

Reproducible Research with R and RStudio (chapters 1 & 2)

Learning Analytics Goes to School (pages 28 - 58)

Acknowledgements

This work was supported by the National Science Foundation grants DRL-2025090 and DRL-2321128 (ECR:BCSER). Any opinions, findings, and conclusions expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.
